No Evidence That Jet Stream Waviness, Atlantic Ocean Overturning Becoming More Unstable

Despite the predictions of computer climate models that both atmospheric and ocean currents will weaken in a warming world, two recent papers (here and here) have found no evidence of such weakening.

Waviness in the polar jet stream, which is currently on the rise and plays a role in outbreaks of extreme winter cold in the Northern Hemisphere, is not as strong as it was in the 1940s, 1960s and 1980s, state three U.S. earth scientists in one paper. And a separate study concludes that the AMOC (Atlantic Meridional Overturning Circulation) has not declined over the past 55 years.

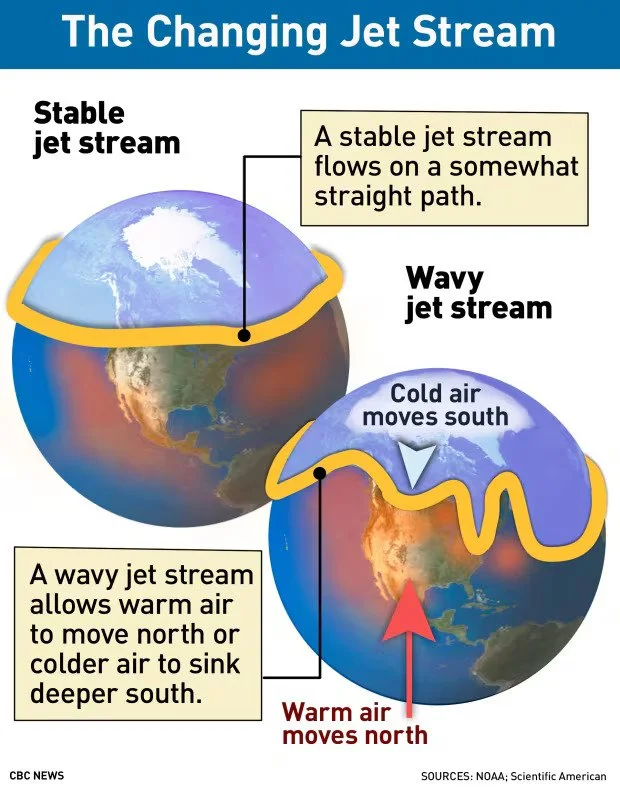

The jet stream is a narrow, high-altitude air current that flows rapidly from west to east in each hemisphere and governs much of our weather. It separates cold air toward the North or South Pole from warmer air on the equatorial side.

Inherently wavy because of the earth’s rotation and the temperature difference between low and high latitudes, the jet stream can become even wavier than usual in winter. This leads to phenomena such as polar vortex outbreaks, in which long troughs of frigid air descend from the Arctic into northern climes.

Exceptionally large jet stream waves since the 1990s have prompted many climatologists to ascribe the behavior to global warming. But the three earth scientists say that this apparent volatility is not so unusual, and that the jet stream has undergone sporadic periods of enhanced waviness since before climate change was considered to be significant.

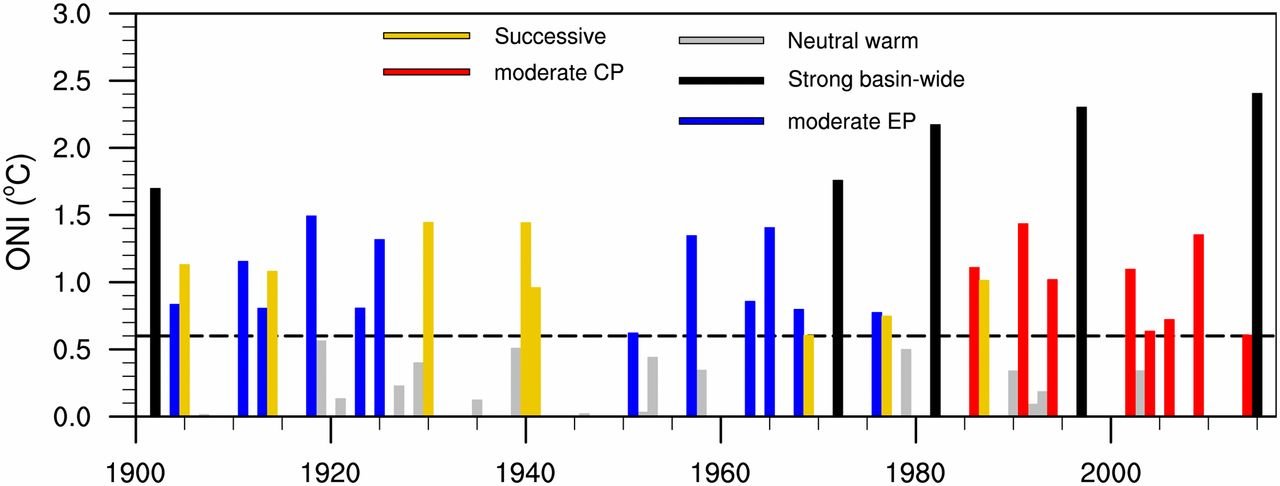

The researchers compiled a record of the jet stream’s wintertime variability going back to 1901, using AI to analyze long-term climate data. Most previous studies concentrated only on the satellite era, which began in 1979.

To their surprise, the analysis revealed that jet stream waviness from the 1940s to the 1980s exceeded modern waviness levels. So today’s waviness is not unprecedented, which makes it highly unlikely that climate change is responsible for it.

The researchers also found that an extra-strong wavy period lasting from the early 1960s to the 1980s, shown in the figure below, was the main driver of the “warming hole,” a prolonged period of abnormally cold U.S. winters during which average winter temperatures dropped by more than 1.3 degrees Celsius (2.3 degrees Fahrenheit). Their study estimates that pronounced jet stream waviness was responsible for two-thirds of the warming hole cooling between 1958 and 1988.

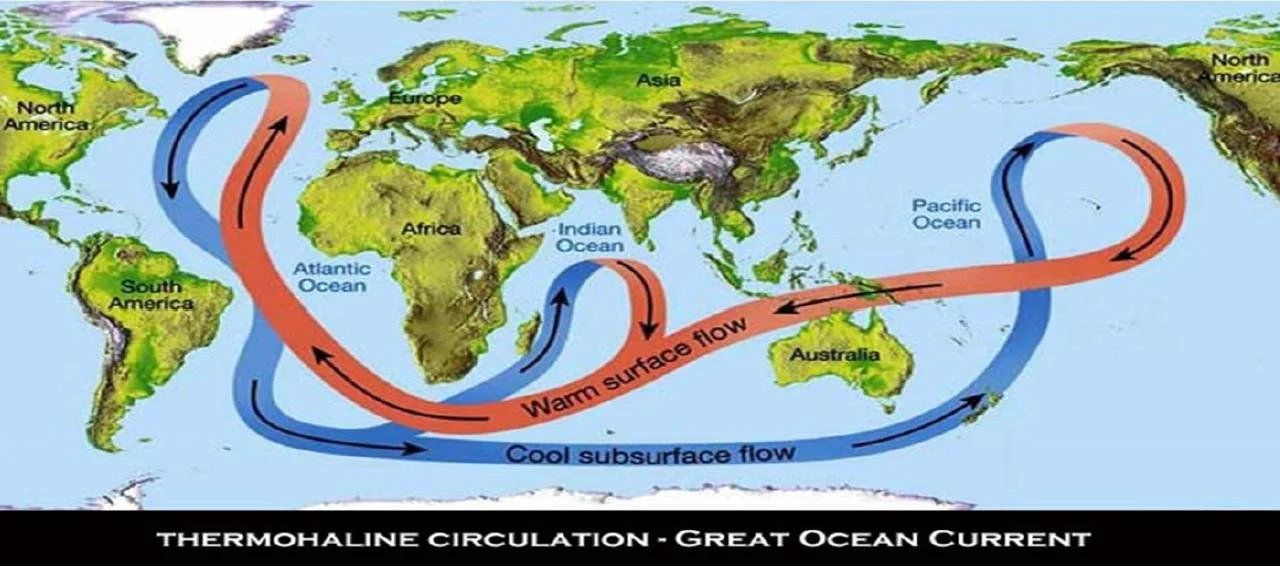
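The warming-hole figures quoted above involve two small pieces of arithmetic that are easy to get wrong. A temperature *change* converts from Celsius to Fahrenheit by the factor 9/5 alone (the 32-degree offset applies only to absolute temperatures), and an attributed share is simply the fraction times the total. The sketch below assumes, for illustration only, that the two-thirds share applies to the 1.3 °C figure; the study may define the two quantities differently, and the function names are hypothetical.

```python
def delta_c_to_f(delta_c: float) -> float:
    """Convert a temperature *change* from degrees C to degrees F.

    Only the 9/5 scale factor applies; the +32 offset used for absolute
    temperatures cancels out when taking a difference.
    """
    return delta_c * 9.0 / 5.0


def attributed_share(total: float, fraction: float) -> float:
    """Part of a total change attributed to one driver (hypothetical helper)."""
    return total * fraction


# Assumption: the two-thirds share applies to the 1.3 C warming-hole figure.
warming_hole_c = 1.3
print(f"{warming_hole_c} C of cooling = {delta_c_to_f(warming_hole_c):.1f} F")
print(f"Jet stream share: about {attributed_share(warming_hole_c, 2 / 3):.2f} C")
```

With these inputs the conversion reproduces the 2.3 °F quoted in the text, and the jet stream's share comes to roughly 0.87 °C.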

The AMOC, illustrated below, forms part of the ocean conveyor belt that redistributes seawater and heat around the globe. Exactly how global warming is changing the AMOC, if at all, is currently unknown. Alarmist proclamations in both the scientific literature and the mainstream media have suggested there are signs of the AMOC slowing, or even reaching a tipping point and shutting down altogether.

Proxies that have been employed to reconstruct the past strength of the AMOC include sea surface temperatures, ocean density and salinity, ¹⁸O isotopes in sediment cores, and corals. Reconstructions based on several of these proxies have estimated declines in AMOC strength of up to 15% (see, for example, here and here).

But the new study finds no evidence of any slowdown in the AMOC since the mid-20th century, which is when the majority of today’s global warming began. The study’s multinational authors attribute the discrepancy with earlier studies to the reliance of most of those studies on sea surface temperature measurements. Sea surface temperature, say the authors, is not a reliable proxy for reconstructing the AMOC.

Instead, the new study focused on anomalies in air-sea heat fluxes in the North Atlantic Ocean, at latitudes between 26.5°N and 50°N. The air-sea heat flux is the rate at which heat is released from the ocean to the atmosphere; more heat is released when the AMOC is stronger, because a stronger AMOC carries more warm water northward to give up its heat.
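Why a stronger AMOC should release more heat can be seen from a textbook bulk formula for the heat carried by an overturning circulation, Q ≈ ρ c_p Ψ ΔT, where Ψ is the overturning volume transport and ΔT is the temperature difference between the warm northward surface branch and the cold southward deep branch. In a steady state, with changes in ocean heat storage and other transports neglected, the heat carried north across a latitude must be given up to the atmosphere north of it. The density, heat capacity, 18 K contrast and transports below are typical illustrative values, not numbers from the study, and the function name is hypothetical.

```python
# Bulk estimate of northward heat transport by an overturning circulation:
#   Q = rho * c_p * psi * delta_t
# All constants and inputs are illustrative assumptions, not study values.

SEAWATER_DENSITY = 1025.0        # kg/m^3, typical value
SEAWATER_HEAT_CAPACITY = 3990.0  # J/(kg K), typical value
SVERDRUP = 1.0e6                 # 1 Sv = 10^6 m^3/s of volume transport
PETAWATT = 1.0e15                # W


def overturning_heat_transport_pw(psi_sv: float, delta_t_k: float) -> float:
    """Northward heat transport in PW for an overturning of psi_sv (Sv)
    with a temperature contrast of delta_t_k (K) between its two branches."""
    q_watts = (SEAWATER_DENSITY * SEAWATER_HEAT_CAPACITY
               * psi_sv * SVERDRUP * delta_t_k)
    return q_watts / PETAWATT


# In a steady state this heat is released to the atmosphere north of the
# latitude where psi is measured, so a stronger AMOC means a larger
# air-sea heat flux there.
for psi in (15.0, 17.0, 19.0):
    q = overturning_heat_transport_pw(psi, 18.0)
    print(f"psi = {psi:.0f} Sv -> Q ~ {q:.2f} PW")
```

With these assumed inputs, 17 Sv gives about 1.25 PW. Because Q is linear in Ψ, a 10% stronger overturning at fixed ΔT carries, and in steady state releases, 10% more heat.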

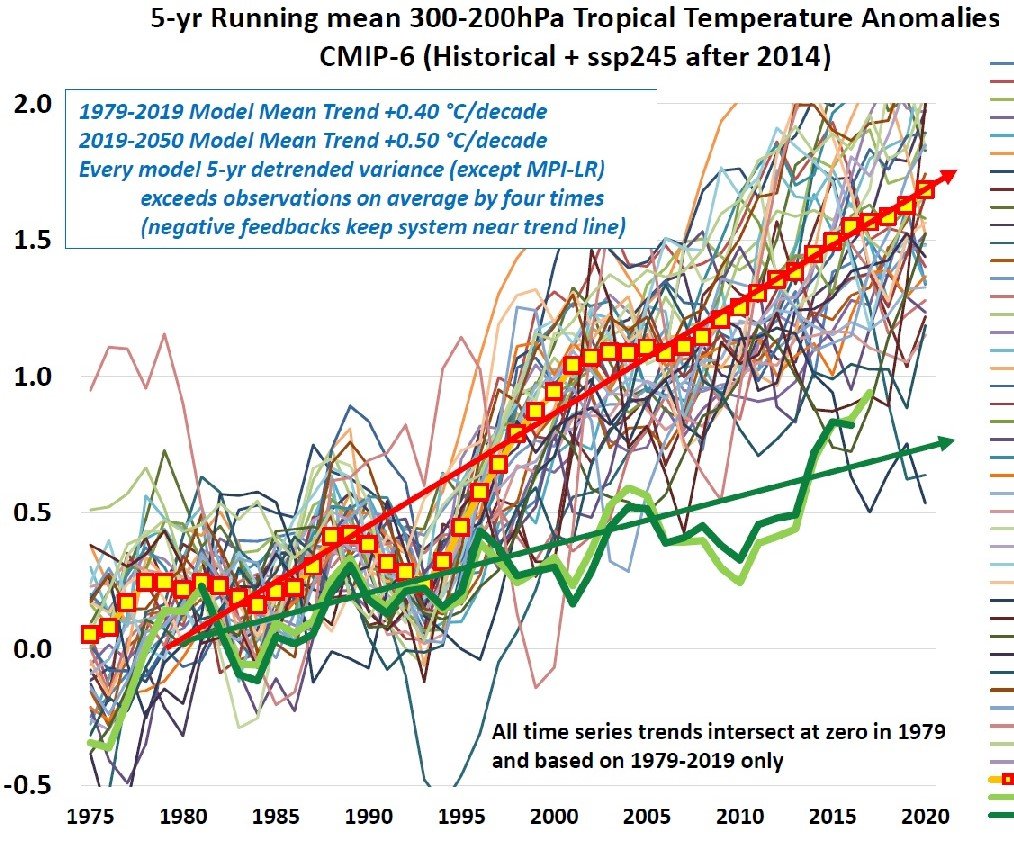

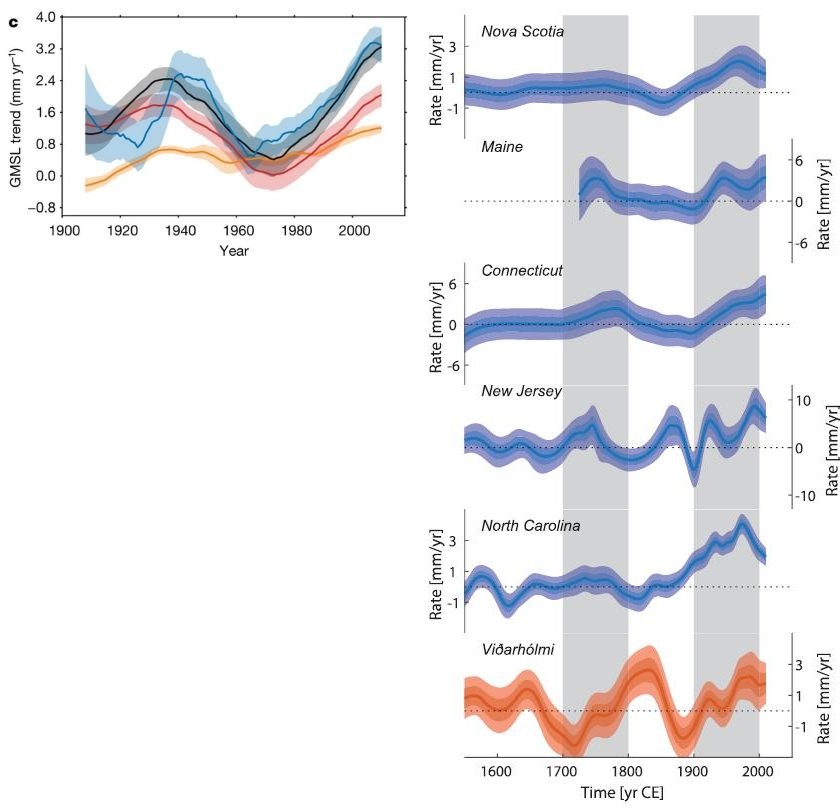

The study authors used 24 different CMIP6 climate models to establish a relationship between a change in the AMOC at a given latitude and the corresponding change in the air-sea heat flux north of that latitude in the North Atlantic basin, and then used that relationship to reconstruct the AMOC from heat-flux data. The adjacent figure presents some of their results, showing estimated changes in the AMOC from 1963 to 2017 at four different latitudes. ERA5 and JRA-55 are two different atmospheric reanalysis datasets.

No sign of any decline in the AMOC is seen during the 55-year period of the study, over the whole latitude range.
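The reconstruction method described above can be read as a two-step regression. The sketch below assumes the simplest version: an ordinary least-squares line fitted across an ensemble of model results, relating the AMOC change at one latitude to the heat-flux change north of it, which is then applied to heat-flux changes from a reanalysis such as ERA5 or JRA-55. The function names and toy numbers are hypothetical, and the paper's actual fitting procedure may differ in detail.

```python
from statistics import fmean


def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0.0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def reconstruct_amoc_change(slope: float, intercept: float,
                            heat_flux_changes: list[float]) -> list[float]:
    """Apply the model-derived line to heat-flux changes from a reanalysis."""
    return [slope * h + intercept for h in heat_flux_changes]


# Step 1 (toy numbers): one point per hypothetical model, pairing the
# heat-flux change north of a latitude (PW) with the AMOC change there (Sv).
model_flux_change = [-0.10, -0.05, 0.0, 0.05, 0.10]
model_amoc_change = [-2.0, -1.0, 0.0, 1.0, 2.0]
slope, intercept = fit_line(model_flux_change, model_amoc_change)

# Step 2 (toy numbers): heat-flux changes standing in for reanalysis values.
reanalysis_flux_change = [0.02, -0.01, 0.03]
print(reconstruct_amoc_change(slope, intercept, reanalysis_flux_change))
```

The design choice worth noting is that the models supply only the *relationship*; the reconstructed AMOC itself is driven by the heat-flux data fed into step 2.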

Additionally, the researchers found that when they looked for a relationship between AMOC changes and sea surface temperature in the same region, using the same 24 CMIP6 climate models as in their own work, the trend was “not significantly different from zero.” Many of the earlier studies that reported a weakened AMOC relied on the now outdated CMIP5 models.
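Whether a fitted slope or trend is “not significantly different from zero” is usually judged by comparing the slope with its standard error. The sketch below uses the textbook ordinary-least-squares t statistic with a critical value of about 2, which corresponds roughly to 95% confidence for a few dozen or more points. It assumes independent, normally distributed residuals; climate time series are typically autocorrelated, which inflates the true uncertainty, so this illustrates the idea rather than reproducing the study's own test. The function names are hypothetical.

```python
import math
from statistics import fmean


def slope_t_statistic(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Return (slope, standard error of slope, t statistic) for an OLS line."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length series with at least 3 points")
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0.0:
        raise ValueError("x values must not all be equal")
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    # Residual sum of squares about the fitted line, with n - 2 dof
    rss = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)
    if se == 0.0:
        # Perfect fit: a nonzero slope is infinitely "significant"
        t = math.copysign(math.inf, slope) if slope != 0.0 else 0.0
    else:
        t = slope / se
    return slope, se, t


def significantly_nonzero(x: list[float], y: list[float],
                          t_crit: float = 2.0) -> bool:
    """True if |t| exceeds t_crit (about 2 for roughly 95% confidence)."""
    _, _, t = slope_t_statistic(x, y)
    return abs(t) > t_crit


years = list(range(10))
noise = [1.0, -1.0] * 5                        # toy alternating noise, no trend
print(significantly_nonzero(years, noise))     # False
trend = [2.0 * yr + e for yr, e in zip(years, noise)]
print(significantly_nonzero(years, trend))     # True
```

For a real series, such as 55 annual AMOC estimates, autocorrelation reduces the effective number of independent points below 55, and the standard error should be inflated accordingly before applying the test.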
