Challenges to the CO2 Global Warming Hypothesis: (13) Global Warming Entirely from Declining Planetary Albedo

One of my early posts in this series on challenges to the CO2 global warming hypothesis featured a paper claiming that the greenhouse effect doesn’t exist. Now the same authors appear to have sidestepped that claim in proposing instead that current global warming can be explained entirely by the observed decrease in planetary albedo over the last few decades.

In a new paper, U.S. research scientists Ned Nikolov and Karl Zeller compare empirical observations of albedo made by CERES (Clouds and the Earth’s Radiant Energy System) satellites to the output of a semiempirical mathematical model used in their earlier work. In their model, the radiative effects integral to the greenhouse effect are replaced by a thermodynamic relationship between air temperature, solar heating and atmospheric pressure, analogous to compression heating of the atmosphere.

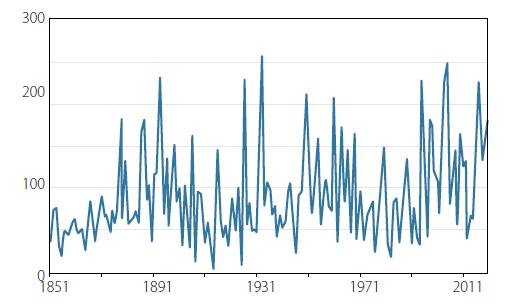

Nikolov and Zeller conclude that the observed decrease of planetary albedo, together with variations of solar output or TSI (total solar irradiance), accounts for 100% of the global warming trend as well as 83% of the interannual variability in global surface air temperature. They find that temperature changes are driven primarily by albedo changes, with only a marginal contribution from TSI.

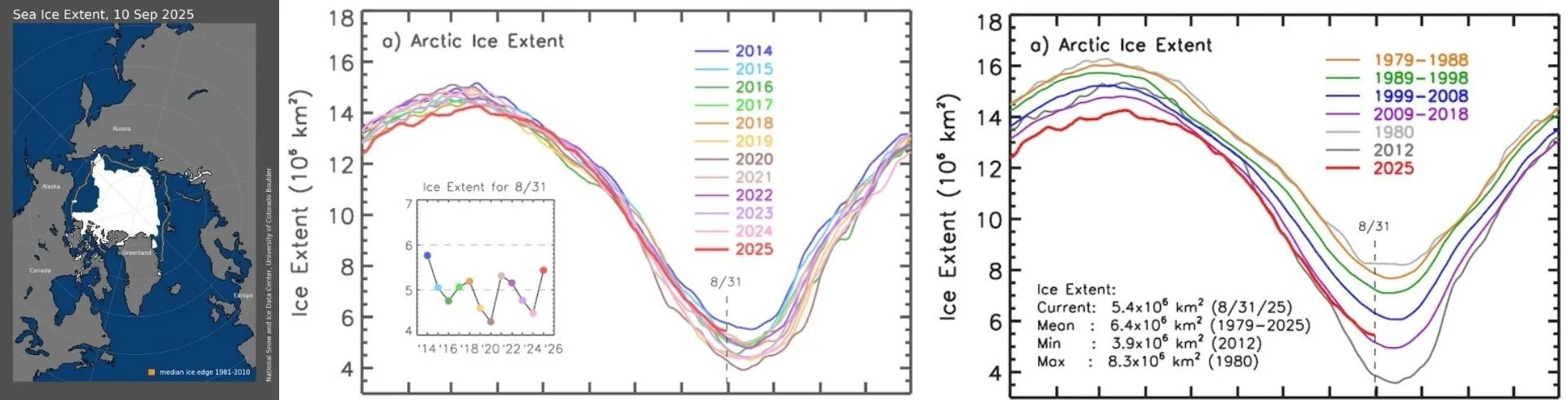

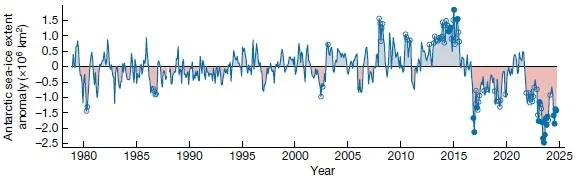

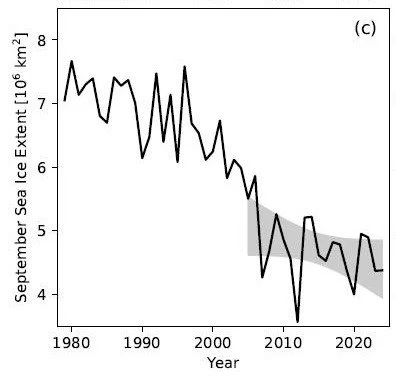

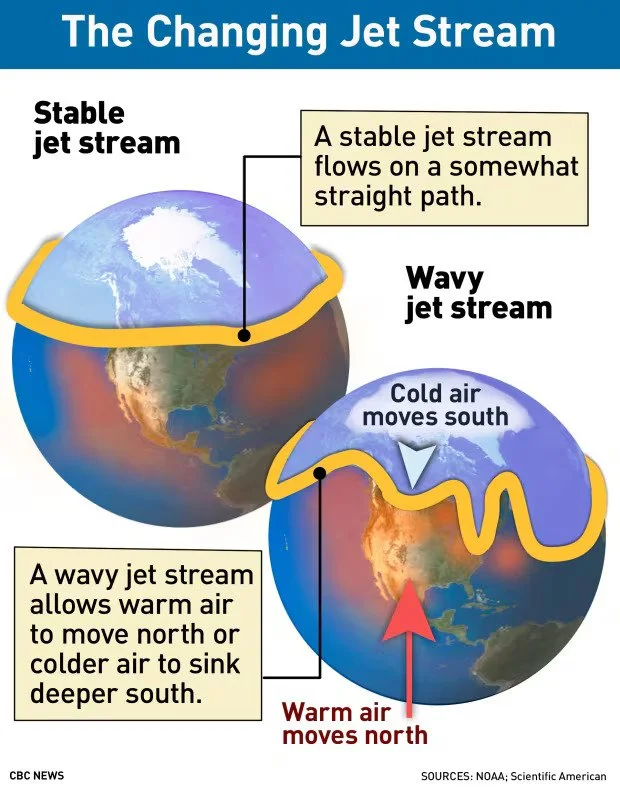

Albedo is a measure of the earth’s ability to reflect incoming solar radiation. Melting of light-colored snow and sea ice due to warming exposes darker surfaces such as soil, rock and seawater, which have lower albedo. The less reflective surfaces absorb more of the sun’s radiation and thus push temperatures higher.

The same effect occurs with low-level clouds, which make up the majority of the planet’s cloud cover. Low-level clouds such as cumulus and stratus clouds are thick enough to reflect 30-60% of the solar radiation striking them back into space, so they act like a parasol and normally cool the earth’s surface. But less cloud cover lowers albedo and therefore results in warming.

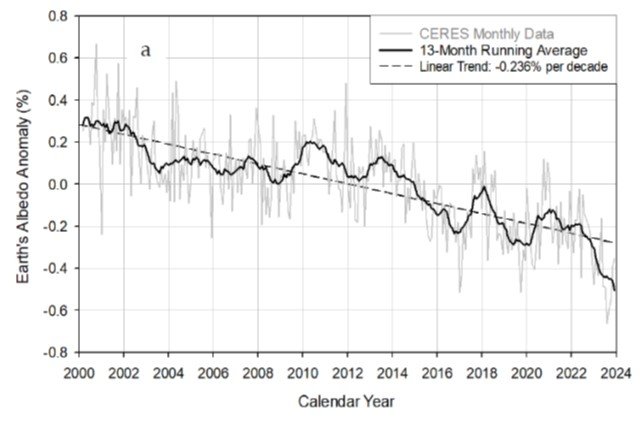

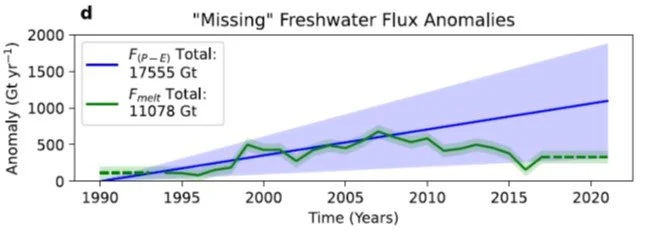

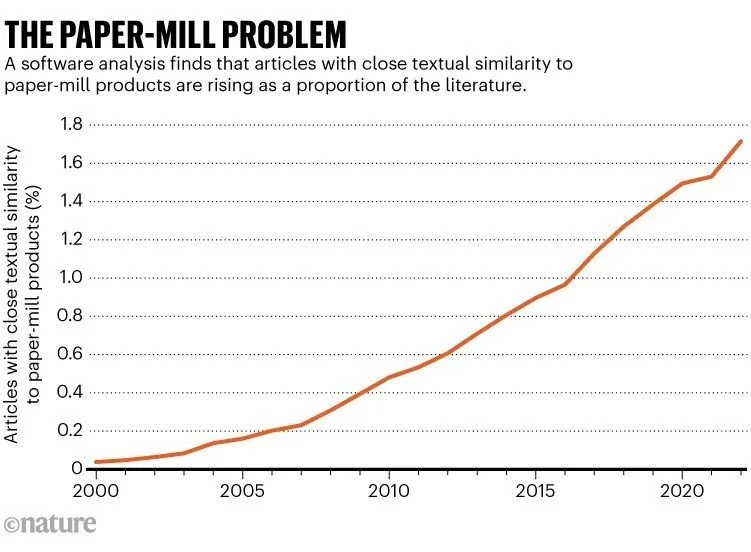

The figure below shows CERES measurements of global albedo from 2000 to 2024, expressed as a percentage anomaly of the 2001 to 2022 mean. The albedo decrease since 2000 is approximately 0.79%, which implies an increase in absorbed shortwave solar radiation of 2.7 watts per square meter over that period. The two researchers point out that this absorption is very close to the total anthropogenic forcing of 2.72 watts per square meter from 1750 to 2019 estimated by the IPCC (Intergovernmental Panel on Climate Change) in its AR6 (Sixth Assessment Report).
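The link between the albedo decrease and the extra absorbed radiation can be checked with back-of-the-envelope arithmetic. The sketch below is not taken from the paper: it assumes a TSI of about 1361 watts per square meter, divides by 4 to average over the whole sphere, and reads the 0.79% decrease as 0.79 percentage points of albedo. The function name is hypothetical.

```python
# Illustrative arithmetic only, not the authors' calculation.
# Assumption: TSI is roughly 1361 W/m^2 (a commonly quoted value).
ASSUMED_TSI = 1361.0  # W/m^2


def absorbed_sw_change(albedo_drop_points, tsi=ASSUMED_TSI):
    """Extra absorbed shortwave flux (W/m^2) for a drop in albedo.

    albedo_drop_points is the decrease in albedo in percentage points,
    e.g. 0.79 means the albedo fraction fell by 0.0079 (an assumed reading).
    """
    # Sunlight intercepted by the earth's disk is spread over the whole
    # sphere, which has 4 times the area, hence the division by 4.
    mean_insolation = tsi / 4.0
    return mean_insolation * albedo_drop_points / 100.0


delta = absorbed_sw_change(0.79)
print(round(delta, 2))  # 2.69
```

Under these assumptions the result rounds to the 2.7 watts per square meter quoted above.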

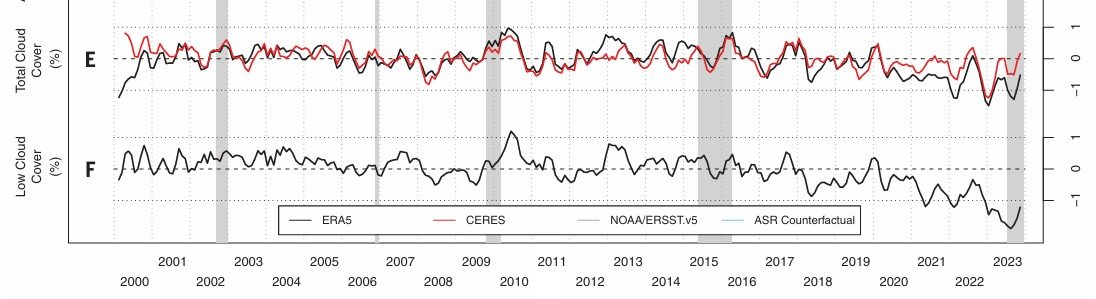

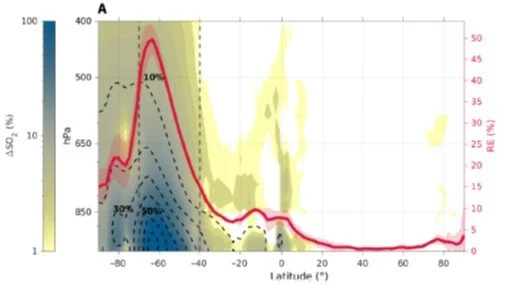

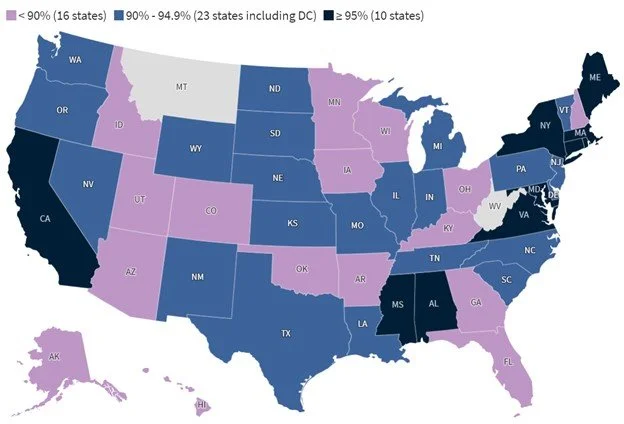

The decline in albedo corresponds to a similar reduction in low cloud cover studied by a trio of German environmental scientists, which I discussed in a 2025 post. Their data for total (red curve) and low cloud (lower black curve) cover, calculated by the study authors from two separate sets of satellite data, is presented in the next figure. The lowered cloud cover is most prominent in northern mid-latitudes and the tropics.

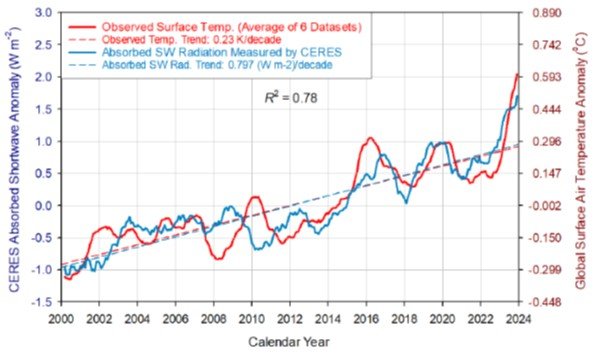

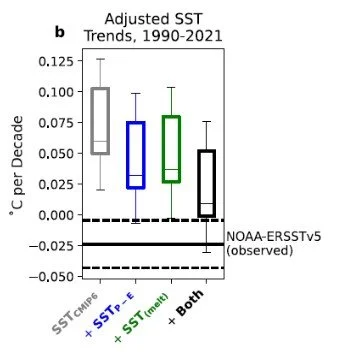

The next figure shows CERES measurements of absorbed shortwave radiation from 2000 to 2024 along with global surface air temperature, measured as the average of six temperature datasets (HadCRUT5, GISTEMP4, NOAA Global, BEST, RSS and NOAA STAR). The temperature anomaly tracks changes in the absorbed solar flux with an average lag of approximately 4 months. Nikolov and Zeller say this close empirical relationship suggests that temperature is directly controlled by the absorbed solar flux.
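This post doesn't say how the 4-month lag was derived. One generic way to estimate such a lag is to shift one series against the other and find the offset that gives the highest correlation. The sketch below illustrates the idea with synthetic monthly data, not CERES or temperature observations; the series, the function names and the built-in 4-month delay are all made up for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def best_lag(driver, response, max_lag=12):
    """Lag (in samples) at which response correlates best with driver."""
    scores = {}
    for lag in range(max_lag + 1):
        # Pair driver[t] with response[t + lag].
        x = driver[: len(driver) - lag]
        y = response[lag:]
        scores[lag] = pearson(x, y)
    return max(scores, key=scores.get)


# Synthetic "absorbed flux": two superimposed oscillations (made-up data).
flux = [
    math.sin(2 * math.pi * t / 40) + 0.3 * math.sin(2 * math.pi * t / 7)
    for t in range(300)
]
# Synthetic "temperature": the flux scaled and delayed by 4 months.
temp = [0.0] * 4 + [0.5 * f for f in flux[:-4]]

print(best_lag(flux, temp))  # 4
```

Real series would need detrending and noise handling first. A high lagged correlation shows only that the two series move together; it doesn't show which one drives the other.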

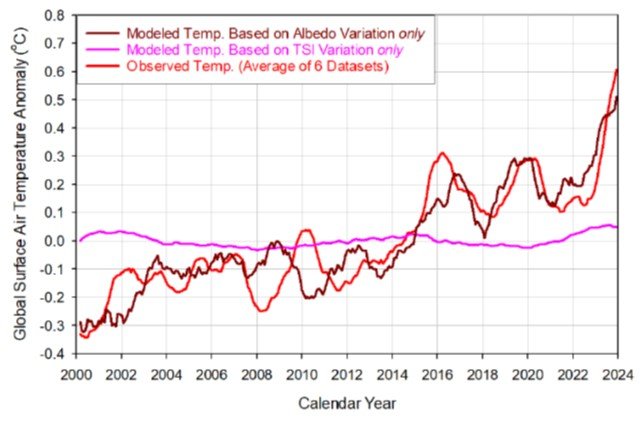

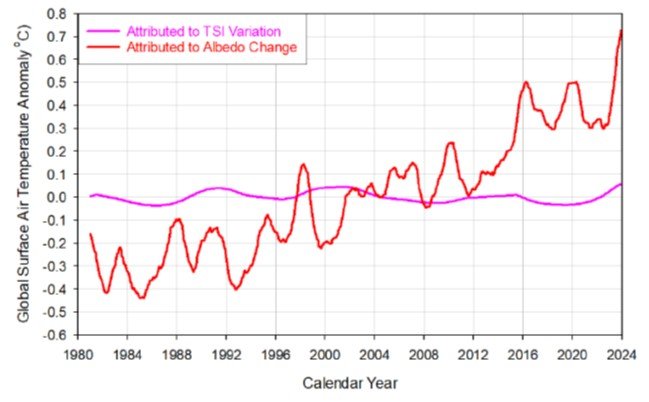

The researchers quantify the individual effects of albedo and TSI on surface temperatures by using their semiempirical mathematical model. The following figure compares modeled temperatures based on each of these two factors with observations. It’s clear that variations in TSI play only a minimal role while variations in albedo dominate the temperature trend.

This conclusion is also supported by the next figure, which depicts the modeled contributions of albedo and TSI separately going back to 1981. According to Nikolov and Zeller’s model, the low contribution from TSI variation indicates that the earth’s climate is 5.6 times more sensitive to changes in solar absorption than to TSI variations.

The estimate is in good agreement with a conclusion reached by Israeli physicist Nir Shaviv, who has investigated the effect of TSI cycles on several climatic variables. Shaviv found that total solar forcing of the earth’s climate, which includes indirect effects, is 5 to 7 times larger than that associated with direct solar warming. Such a large amplification factor would imply that the climate is much more sensitive to the sun than to CO2. Of the various possible indirect amplification mechanisms, Shaviv favors the influence of cosmic rays on low cloud cover.

Nonetheless, the observation of declining albedo or low cloud cover raises the chicken-and-egg question: is decreased albedo the cause or effect of current warming? While Nikolov and Zeller argue for the former, they also call for large-scale interdisciplinary research into the physical mechanisms controlling the earth’s albedo and cloud physics, in order to resolve the question.

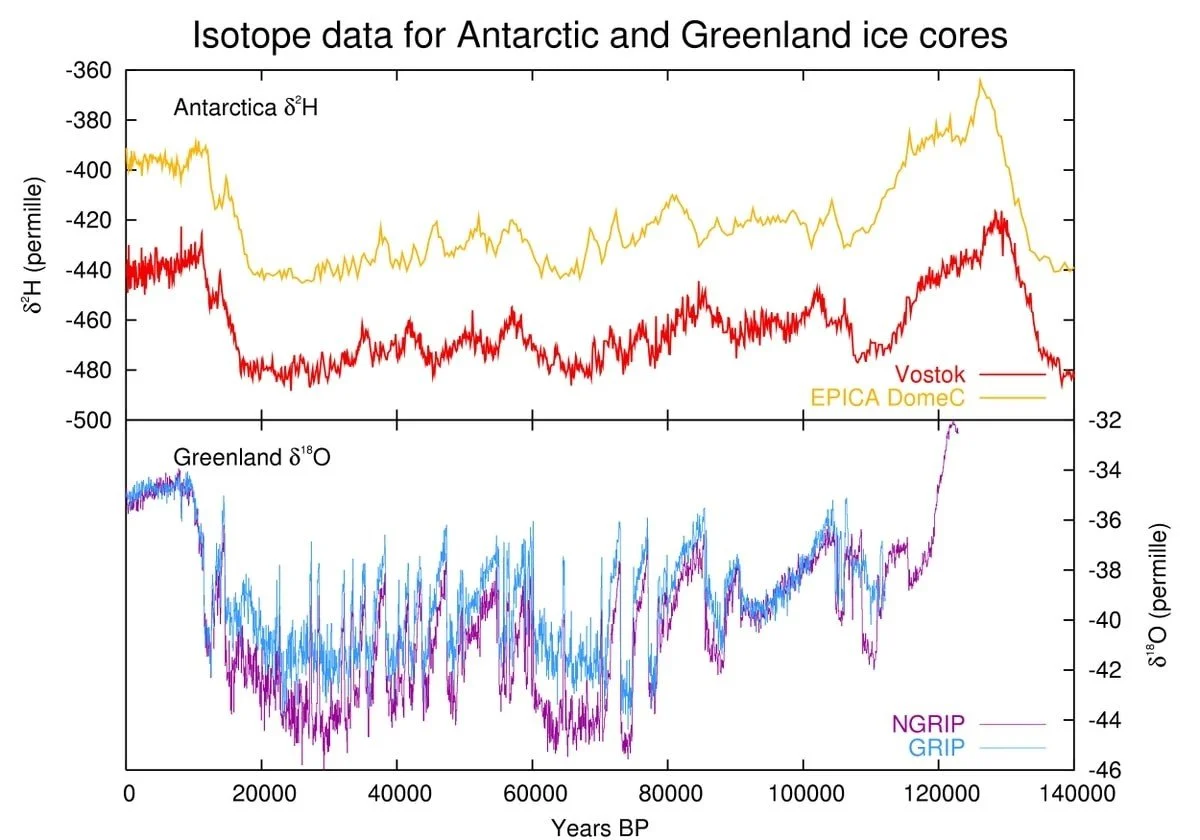
