Ice Sheet Update (1): Evidence That Antarctica Is Cooling, Not Warming

Melting of the Antarctic and Greenland ice sheets due to climate change has led to widespread panic about the future impact of global warming. But, as we’ll see in this and a subsequent post, Antarctica may not be warming overall, while the rate of ice loss in Greenland has slowed recently.

The kilometers-thick Antarctic ice sheet contains about 90% of the world’s freshwater ice and would raise global sea levels by about 60 meters (200 feet) were it to melt completely. The Sixth Assessment Report of the UN’s IPCC (Intergovernmental Panel on Climate Change) maintains with high confidence that, between 2006 and 2018, melting of the Antarctic ice sheet was causing sea levels to rise by 0.37 mm (15 thousandths of an inch) per year, contributing about 10% of the global total.
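The unit conversions in this paragraph are easy to check. The short Python sketch below uses only the 0.37 mm per year and roughly 10% figures quoted above; the implied global total is derived arithmetically from them, not taken from the IPCC report.

```python
# Back-of-envelope checks on the sea-level figures quoted above.
MM_PER_INCH = 25.4
M_PER_FOOT = 0.3048

def mm_to_thousandths_inch(mm: float) -> float:
    """Convert millimeters to thousandths of an inch."""
    return mm / MM_PER_INCH * 1000

def implied_global_rate(antarctic_mm_per_yr: float, share: float) -> float:
    """Global rate implied if Antarctica supplies `share` of the total."""
    return antarctic_mm_per_yr / share

antarctic_rate = 0.37  # mm per year, 2006-2018 (figure quoted above)
share = 0.10           # Antarctica's approximate share of the global total

print(f"{mm_to_thousandths_inch(antarctic_rate):.1f} thousandths of an inch per year")
print(f"implied global rate: {implied_global_rate(antarctic_rate, share):.1f} mm per year")
print(f"60 m of sea-level rise = {60 / M_PER_FOOT:.0f} ft")
```

The "15 thousandths of an inch" and "200 feet" in the text are rounded versions of these results.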

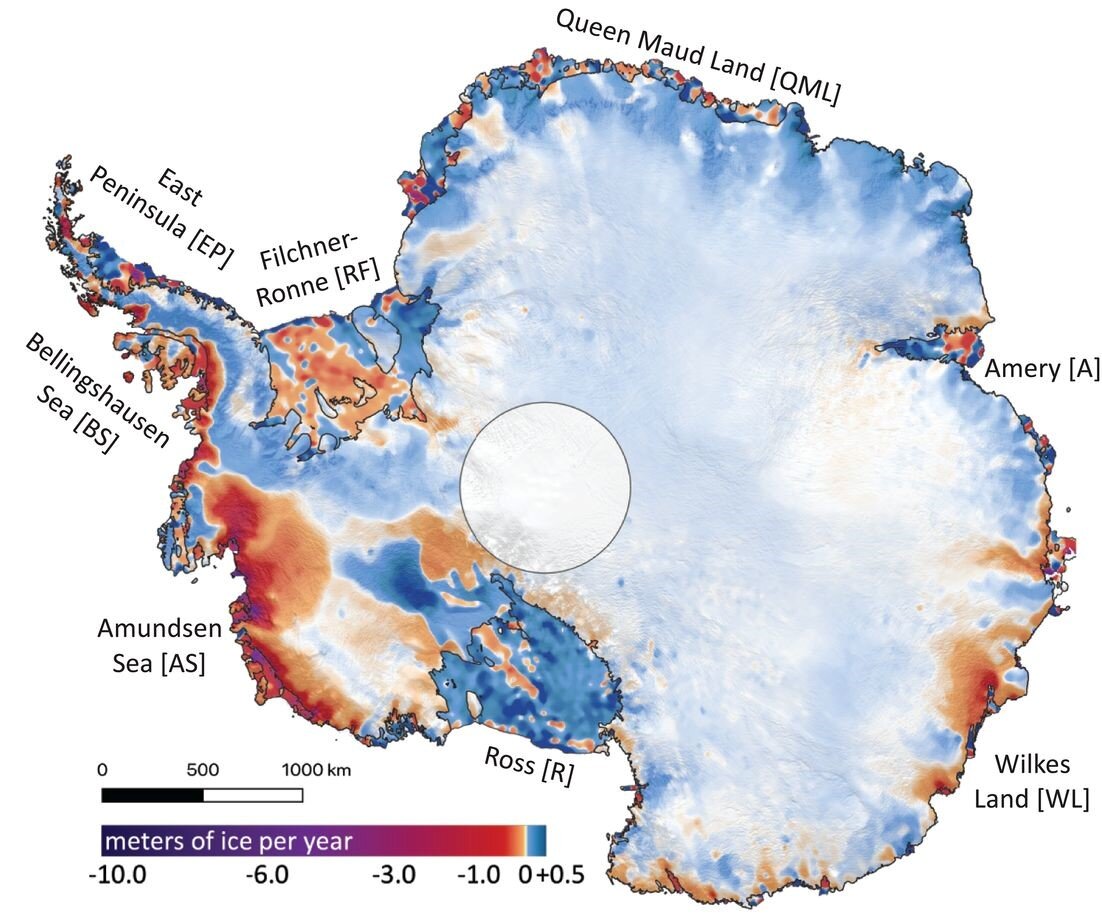

By far the largest region is East Antarctica, which covers two thirds of the continent, as seen in the figure above, and holds nine times as much ice by volume as West Antarctica. The hype about imminent collapse of the Antarctic ice sheet is based on rapid melting of the glaciers in West Antarctica, which contribute an estimated 63% (see here) to 73% (here) of the annual Antarctic ice loss. East Antarctica, on the other hand, may not have shed any mass at all over the last three decades, and may even have gained slightly, thanks to new ice formed from enhanced snowfall.

The influence of global warming on Antarctica is uncertain. In an earlier post, I reported the results of a 2014 research study that concluded West Antarctica and the small Antarctic Peninsula, which points toward Argentina, had warmed appreciably from 1958 to 2012, but East Antarctica had barely heated up at all over the same period. The warming rates were 0.22 degrees Celsius (0.40 degrees Fahrenheit) and 0.33 degrees Celsius (0.59 degrees Fahrenheit) per decade, for West Antarctica and the Antarctic Peninsula respectively – both faster than the global average.
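Because these are rates of change rather than absolute temperatures, converting them to Fahrenheit uses only the 9/5 scale factor; the +32 offset in the usual conversion formula cancels when one temperature is subtracted from another. A minimal Python sketch, checked against the two warming rates above:

```python
def c_rate_to_f_rate(c_per_decade: float) -> float:
    """Convert a temperature trend from deg C/decade to deg F/decade.

    A trend is a temperature *difference* per unit time, so the +32
    offset used for absolute temperatures cancels; only 9/5 applies.
    """
    return c_per_decade * 9 / 5

# Warming rates from the 2014 study, in deg C per decade.
rates_c = {"West Antarctica": 0.22, "Antarctic Peninsula": 0.33}

for region, rate in rates_c.items():
    print(f"{region}: {rate:.2f} C/decade = {c_rate_to_f_rate(rate):.2f} F/decade")
```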

But a 2021 study reaches very different conclusions: that both West Antarctica and East Antarctica cooled between 1979 and 2018, while the Antarctic Peninsula warmed, but at a much lower rate than the 2014 study found. Both studies are based on reanalyses of limited temperature data, mostly from coastal meteorological stations, that attempt to interpolate temperatures across the continent’s less accessible interior.

The later study appears to carry more weight because it incorporates data from 41 stations, whereas the 2014 study includes only 15. The 2021 study concludes that East Antarctica and West Antarctica have cooled since 1979 at rates of 0.70 degrees Celsius (1.3 degrees Fahrenheit) per decade and 0.42 degrees Celsius (0.76 degrees Fahrenheit) per decade, respectively, while the Antarctic Peninsula has warmed at 0.18 degrees Celsius (0.32 degrees Fahrenheit) per decade.
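A per-decade trend only becomes a total temperature change once it is multiplied by the length of the record. The sketch below does that for the 2021 rates quoted above, treating 1979 to 2018 as a 39-year span (end year minus start year). It assumes the trend is linear over the whole period, which is what a single per-decade figure implies; it is an illustration, not a reproduction of the study's analysis.

```python
# Rates from the 2021 study, deg C per decade (negative = cooling).
RATES_C_PER_DECADE = {
    "East Antarctica": -0.70,
    "West Antarctica": -0.42,
    "Antarctic Peninsula": 0.18,
}

def cumulative_change(rate_per_decade: float, start_year: int, end_year: int) -> float:
    """Total change implied by a constant linear trend between two years."""
    decades = (end_year - start_year) / 10
    return rate_per_decade * decades

for region, rate in RATES_C_PER_DECADE.items():
    total = cumulative_change(rate, 1979, 2018)
    print(f"{region}: {total:+.2f} C over 1979-2018")
```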

The possible cooling of West Antarctica is the most significant finding, because that is where thinning glaciers are losing the most ice. Ice loss and gain rates from Antarctica since 2003, measured by NASA’s ICESat satellite, are illustrated in the next figure, in which dark reds and purples show ice loss and blues show gain.

The high loss rates along the coast of West Antarctica have been linked to thinning of the floating ice shelves at the ends of the glaciers, caused by so-called circumpolar deep water warmed by climate change. Although the disintegration of an ice shelf that is already floating on the ocean doesn’t raise sea levels, a retreating ice shelf can accelerate the downhill flow of the glaciers that feed it. This is thought to be capable of destabilizing the glaciers and the ice sheets behind them.

However, not all the melting of West Antarctic glaciers is due to global warming and the erosion of ice shelves by circumpolar deep water. As I’ve discussed in a previous post, active volcanoes beneath West Antarctica are melting the ice sheet from below. One of these volcanoes is making a major contribution to the melting of the Pine Island Glacier. That glacier, which lies next to the Thwaites Glacier in the first figure above, is responsible for about 25% of the continent’s ice loss.

If the Antarctic Peninsula were to cool along with East Antarctica and West Antarctica, the naturally occurring SAM (Southern Annular Mode) – the north-south movement of a belt of strong southern westerly winds surrounding Antarctica – could switch from its present positive phase to negative. A negative SAM would result in less upwelling of circumpolar deep water, thus reducing ice shelf thinning and the associated melting of glaciers.
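The SAM is usually tracked with an index. One common definition takes the difference in normalized zonal-mean sea-level pressure between 40°S and 65°S; positive values mean lower pressure over Antarctica relative to the mid-latitudes, with the westerly belt stronger and contracted toward the pole. The Python sketch below computes such an index from two pressure series. The pressure values are made up for illustration, and normalizing each series over itself rather than over a fixed base period is a simplifying assumption.

```python
from statistics import mean, pstdev

def standardize(series: list[float]) -> list[float]:
    """Convert a series to anomalies in units of its own standard deviation."""
    mu, sigma = mean(series), pstdev(series)
    return [(x - mu) / sigma for x in series]

def sam_index(slp_40s: list[float], slp_65s: list[float]) -> list[float]:
    """SAM index: normalized 40S pressure minus normalized 65S pressure."""
    return [a - b for a, b in zip(standardize(slp_40s), standardize(slp_65s))]

# Hypothetical zonal-mean sea-level pressures (hPa) for five seasons.
slp_40s = [1014.0, 1015.5, 1013.0, 1016.0, 1014.5]
slp_65s = [986.0, 983.0, 989.0, 982.0, 985.0]

for i, value in enumerate(sam_index(slp_40s, slp_65s)):
    phase = "positive" if value > 0 else "negative"
    print(f"season {i}: SAM = {value:+.2f} ({phase})")
```

A switch to the negative phase described above would show up as a run of negative index values, i.e. relatively higher pressure over Antarctica.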

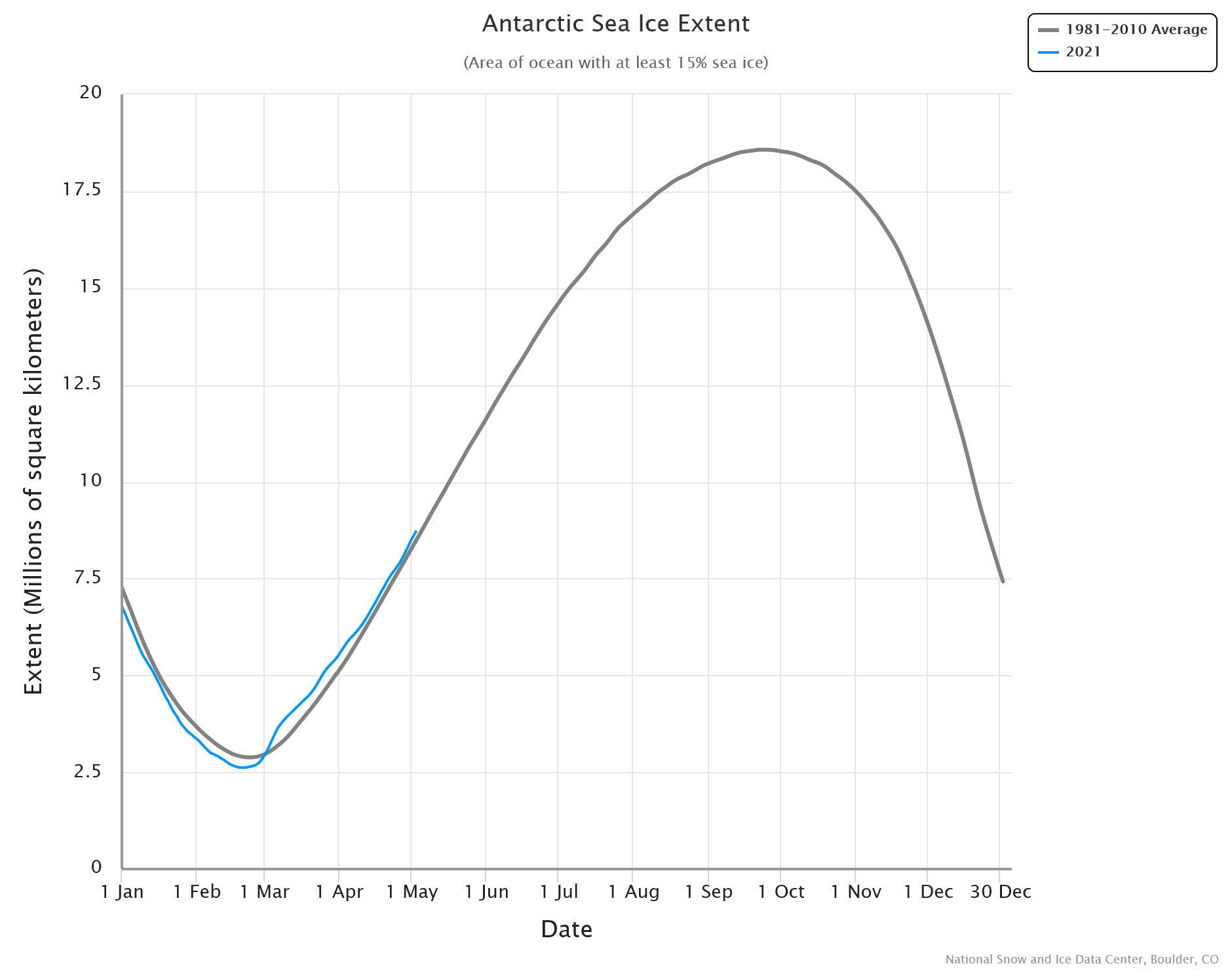

As seen in the following figure, the 2021 study’s reanalysis of Antarctic temperatures shows an essentially flat trend for the Antarctic Peninsula since the late 1990s (red curve); warming occurred only before that time. The same behavior is also evident in the earlier 2014 study, which goes back to 1958. So future cooling of the Antarctic Peninsula is not out of the question. And the South Pole, in East Antarctica, experienced its coldest winter on record in 2021.
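Whether a record shows warming "only before" some date can be checked by fitting separate least-squares trends on either side of a chosen breakpoint. The plain-Python sketch below does this; the anomaly series is synthetic (warming until 1998, flat afterwards), and the 1998 breakpoint is a hypothetical stand-in for "the late 1990s", not a value taken from either study.

```python
def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den

def trend_per_decade(years: list[int], temps: list[float], start: int, end: int) -> float:
    """Least-squares trend in deg C per decade over start..end inclusive."""
    pairs = [(y, t) for y, t in zip(years, temps) if start <= y <= end]
    xs, ys = zip(*pairs)
    return ols_slope(list(xs), list(ys)) * 10

# Synthetic anomalies: +0.03 C per year up to 1998, then flat (illustrative only).
years = list(range(1979, 2019))
temps = [0.03 * (min(y, 1998) - 1979) for y in years]

BREAK = 1998  # hypothetical breakpoint standing in for "the late 1990s"
print(f"1979-{BREAK}: {trend_per_decade(years, temps, 1979, BREAK):+.2f} C/decade")
print(f"{BREAK}-2018: {trend_per_decade(years, temps, BREAK, 2018):+.2f} C/decade")
print(f"1979-2018: {trend_per_decade(years, temps, 1979, 2018):+.2f} C/decade")
```

Note that a single trend fitted over the whole record still comes out positive even though the later segment is flat, which is why the choice of period matters when comparing studies.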

Next: Ice Sheet Update (2): Evidence That Greenland Melting May Have Slowed Down